Mae Akins Roth - Peeking At Model Accuracy

Have you ever thought about how our clever digital systems figure out if they are doing a good job? It's a bit like a chef tasting their dish to see if it's just right. When we talk about something like Mae Akins Roth, we are really looking at a way of thinking about how these systems measure their own performance and how they learn. It’s about getting a good sense of how close their guesses are to what's actually true.

You see, sometimes a system might make a guess, and that guess could be a little off, or it could be very, very off. Knowing the difference between those two things is quite important. This whole idea of Mae Akins Roth, in a way, helps us sort through those guesses, figuring out which ones are pretty close and which ones might need a lot more work. It’s like having a special ruler for how well our computer models are doing their tasks.

So, we're going to explore some really neat ideas that help us peek behind the curtain of these smart systems. We'll look at how they learn from pictures, how they handle long stretches of words, and even how they figure out what they need to know without being told every single detail. It’s all part of what makes these digital helpers so clever, and Mae Akins Roth, in some respects, gives us a nice name to put on these explorations.

Table of Contents

- What is "mae akins roth" Anyway?

- Where Does "mae akins roth" Fit in Language Models?

- Why Does "mae akins roth" Matter for Learning?

- "mae akins roth" - Looking at Model Performance

What is "mae akins roth" Anyway?

When we talk about "mae akins roth," we are really talking about a set of ideas that help us measure how well a computer model predicts things. Think about it like this: if you try to guess how many apples are in a basket, and you say ten, but there are actually nine, that's a small difference. If you say a hundred, that's a very big difference. Computer models have similar moments where they guess, and we need ways to check their work. One way we do this is with something called Mean Absolute Error, or MAE for short. It measures the average size of the mistakes, without caring whether a guess was too high or too low, just how far off it was. It's a pretty straightforward, direct way to look at things.

There's also a close cousin to MAE called Mean Absolute Percentage Error, or MAPE. This one takes those errors and turns them into percentages. So, instead of saying "it was off by two apples," it says "it was off by twenty percent." This can be super helpful because a mistake of two apples in a basket of ten is far more serious than a mistake of two apples in a basket of a thousand. The percentage approach makes it easier to compare how well models are doing, especially when the things they are guessing about can be very different in size. It works almost like a common scale for errors.

The Heart of "mae akins roth" - Getting a Sense of Prediction Errors

So, the core idea behind part of "mae akins roth" is about getting a clear picture of how much a model's prediction differs from the real value. The MAE approach is quite simple. It adds up all the absolute differences between what the model predicted and what actually happened, and then it divides by the number of predictions. This gives us a single number, in the same units as the thing being predicted, that tells us on average how far off the model was. It's a very clear way to see if the model is generally close to or generally far away from the truth.
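To make that recipe concrete, here is a minimal sketch in plain Python. The function name and the apple counts are made up for illustration; they are not from any particular library.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted| over all pairs of values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical apple counts: the true values and a model's guesses.
actual = [9, 12, 20, 15]
predicted = [10, 10, 23, 15]

# Absolute errors are 1, 2, 3 and 0, so the average is 6 / 4.
mae = mean_absolute_error(actual, predicted)
print(mae)
```

Notice that the guess of 10 (too high) and the guess of 10 for 12 (too low) both count as plain distances; the sign of the error never enters the result.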

Now, MAPE builds on this by looking at the proportion of the error to the actual value. This means it cares not just about how big the error is, but how big it is compared to what it was supposed to be. A smaller MAPE value means the model is doing a better job. It's like saying, "this model was only off by a tiny fraction of the real amount," which is pretty good, isn't it? This method is often used because it gives a good feel for relative accuracy, which makes it handy for comparing different situations. One catch: because each error is divided by the actual value, MAPE cannot be computed when an actual value is zero, and it balloons when actual values are very close to zero.
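Here is a small sketch of MAPE under the same assumptions as before: plain Python, a made-up function name, and made-up basket sizes. It refuses actual values of zero, since the percentage is undefined there.

```python
def mean_absolute_percentage_error(actual, predicted):
    """Average of |actual - predicted| / |actual|, expressed as a percentage."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    ratios = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


# The same two-apple miss, on a small basket and on a large one.
small_basket = mean_absolute_percentage_error([10], [12])      # about 20 percent
large_basket = mean_absolute_percentage_error([1000], [1002])  # about 0.2 percent
print(small_basket, large_basket)
```

The absolute error is two apples in both cases, so MAE would rate them the same; MAPE is what separates them.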

You might hear about another way to measure errors called Mean Squared Error, or MSE. MSE and MAE work quite differently. MSE takes those differences, squares them, and then averages them. Squaring means that big mistakes get much more attention; they get amplified, if you will. So, a model that makes a few really big errors can look much worse under MSE than a model that makes many small ones, even when MAE rates the two about the same. MAE, on the other hand, counts every error in direct proportion to its size, so a mistake twice as large counts twice as much rather than four times as much. It's just a different perspective.
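A tiny comparison shows the difference. The two error lists below are invented so that both have the same MAE; only MSE tells them apart. The helper names are for illustration only.

```python
def mae_of(errors):
    """Mean of the absolute errors."""
    return sum(abs(e) for e in errors) / len(errors)


def mse_of(errors):
    """Mean of the squared errors."""
    return sum(e * e for e in errors) / len(errors)


many_small = [2, 2, 2, 2, 2]   # five modest misses
one_large = [0, 0, 0, 0, 10]   # four perfect guesses and one big miss

print(mae_of(many_small), mae_of(one_large))  # identical under MAE
print(mse_of(many_small), mse_of(one_large))  # the big miss dominates under MSE
```

Which metric to prefer depends on whether one large miss really is worse for you than several small ones adding up to the same total.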

How Does "mae akins roth" See Image Data?

Beyond just numbers, the idea of "mae akins roth" also touches upon how computers learn from pictures. There's a method called the Masked Autoencoder, which confusingly is also abbreviated MAE but has nothing to do with Mean Absolute Error, that does something quite clever with images. Imagine you have a picture, and you chop it up into many little squares, like a checkerboard. This method will then randomly hide some of those squares, almost like putting a sticky note over parts of the picture. After that, the computer tries to guess what was under those sticky notes, reconstructing the parts that were hidden. This whole process helps the computer learn about the patterns and structures within images without being told what each part of the image is, which is pretty neat.

This way of learning from images, where parts are hidden and then guessed, got its inspiration from how language models learn. Think about how a system like BERT works with words: it hides some words in a sentence and tries to predict them. The image version, which is very much a part of the "mae akins roth" conceptual space, does something similar but with picture pieces instead of words. So, instead of a masked word, you have a masked block of an image. It's a very effective way for systems to build up a deep understanding of visual information.

The architecture of this image learning system, which we might call the "MAE body" in our discussion of "mae akins roth," usually has a few main parts during its initial training. There's the part that does the hiding, then an encoder that processes the visible parts, and a decoder that tries to put the hidden parts back together. So, when an image comes in, it's first broken into those small squares, and some of those squares are chosen to be masked out. The system then works hard to fill in those blanks, learning a lot about images just by trying to complete them.
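The chopping and hiding steps can be sketched without any neural network at all. The code below is a simplified illustration in plain Python: the toy 8x8 "image", the patch size, and the 75 percent mask ratio are all assumptions chosen for the example, and the encoder and decoder are left out entirely.

```python
import random


def split_into_patches(image, patch_size):
    """Split a 2D grid (a list of rows) into square patches, left to right, top to bottom."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([row[left:left + patch_size]
                            for row in image[top:top + patch_size]])
    return patches


def choose_masked(num_patches, mask_ratio, rng):
    """Pick which patch indices get the 'sticky note'."""
    num_masked = int(num_patches * mask_ratio)
    return set(rng.sample(range(num_patches), num_masked))


rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[r * 8 + c for c in range(8)] for r in range(8)]  # toy 8x8 grayscale image
patches = split_into_patches(image, patch_size=2)          # 16 patches of 2x2 pixels
masked = choose_masked(len(patches), mask_ratio=0.75, rng=rng)

# Only the visible patches would be handed to the encoder;
# the masked ones are what the decoder would try to reconstruct.
visible = [(i, p) for i, p in enumerate(patches) if i not in masked]
targets = [(i, p) for i, p in enumerate(patches) if i in masked]
print(len(visible), "visible,", len(targets), "hidden")
```

Keeping each patch's index alongside its pixels matters, because the decoder has to know where in the picture each blank sits.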

Where Does "mae akins roth" Fit in Language Models?

Now, let's shift our focus a little and think about how "mae akins roth" relates to language models, those clever systems that can understand and generate human language. When these models deal with words, especially in long sentences or paragraphs, they need a way to keep track of where each word is. Is it at the beginning, the middle, or the end? Is it near another important word? This is where something called "position encoding" comes into play. It helps the model understand the order and distance of words, which is very important for making sense of what's being said.

One particular way of handling this word placement is called Rotary Position Embedding, or RoPE for short. This is a pretty innovative idea that helps language models deal with long pieces of writing in a more effective way. Instead of just giving each word a fixed number for its position, RoPE weaves information about the relative position of words directly into the way the model processes them. This means the model can better understand how words relate to each other, no matter how far apart they are in a sentence. It's quite a smart way to help models grasp the meaning of long texts.

"mae akins roth" and the Way Words Find Their Place

So, a model that uses RoPE, like one called RoFormer, takes out the older, fixed way of marking word positions and puts RoPE in its place. This change helps the model handle really long pieces of text without getting confused about which word is where. For example, if you have a document with hundreds of words, a model using RoPE can still understand the connections between words that are far apart, which is something older models sometimes struggled with. This ability to keep track of relationships over long distances is a big step forward for language understanding. It's almost like giving the model a better memory for context.

The core idea of RoPE, which is a key part of the "mae akins roth" conceptual framework when we think about language, is about integrating how words are positioned relative to each other right into the model's self-attention mechanism. This mechanism is how the model weighs the importance of different words when trying to understand a particular word. By building relative position information directly into this process, RoPE makes it easier for the model to see which words are important to each other based on their distance. It's a subtle but powerful change that helps these systems become much more capable at handling complex language.
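To see what "building relative position into attention" means, here is a minimal sketch of the rotation idea in plain Python. It assumes the commonly used formulation where each pair of vector components is rotated by an angle proportional to the word's position; the base of 10000, the toy vectors, and the function names are assumptions for illustration, not details taken from this article.

```python
import math


def rotate(vec, position, base=10000.0):
    """Rotate each consecutive pair (vec[i], vec[i+1]) by an angle that grows with position."""
    d = len(vec)
    if d % 2:
        raise ValueError("vector length must be even")
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # each pair turns at its own speed
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * cos_t - y * sin_t, x * sin_t + y * cos_t])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


query = [1.0, 0.5, -0.3, 2.0]  # toy query vector
key = [0.2, -1.0, 0.7, 0.4]    # toy key vector

# Same gap of 3 positions, placed early in the text and then 100 positions later.
score_early = dot(rotate(query, 5), rotate(key, 2))
score_late = dot(rotate(query, 105), rotate(key, 102))
print(score_early, score_late)  # equal up to floating-point rounding
```

The attention score between the two words depends only on how far apart they are, not on where the pair sits in the text, which is the relative-position behavior described above.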

Consider how this might impact things like summarizing long articles or answering questions based on detailed documents. If the model can truly understand the connections between words spread throughout a long text, it can do a much better job at these tasks. RoPE helps models maintain that sense of order and connection, which is really, really important for any system that deals with human language. It's like giving the model a built-in compass for navigating sentences, in a way.

Why Does "mae akins roth" Matter for Learning?

When we look at "mae akins roth," we also touch upon a very interesting way computers learn, often called "self-supervised learning." Imagine a student who learns by reading a book and then quizzing themselves on what they've read, without anyone else making up the questions. That's a bit like self-supervised learning. The computer creates its own learning tasks from the data it already has. It figures out what it needs to learn without needing a human to label every single piece of information, which is a very powerful idea.

This approach is really important because getting humans to label vast amounts of data can be incredibly time-consuming and expensive. Think about having to tell a computer what every object in a million pictures is, or what every word in a billion sentences means. Self-supervised learning helps bypass a lot of that manual work. The "supervision" or guidance for learning comes directly from the data itself, often by predicting missing parts or understanding relationships within the data. It's like the data teaches itself, which is pretty cool, isn't it?

The "mae akins roth" Approach to Learning by Itself

So, the way the MAE system learns from images by hiding parts and then trying to guess them is a perfect example of self-supervised learning. The system doesn't need someone to tell it, "this is a cat," or "this is a tree." Instead, it learns about what cats and trees look like by trying to fill in the missing pieces of pictures of cats and trees. The task of reconstructing the masked parts provides the learning signal, which is really clever. This means it can learn from a huge amount of unlabeled images, which are much easier to come by than labeled ones.
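Here is one way that learning signal might be scored, as a rough sketch. It assumes the error is a mean squared difference measured only on the hidden patches; both of those choices, along with the toy numbers and the function name, are assumptions made for this example.

```python
def masked_reconstruction_loss(original, reconstructed, masked_indices):
    """Mean squared error over the pixel values of the masked patches only."""
    total, count = 0.0, 0
    for i in masked_indices:
        for o, r in zip(original[i], reconstructed[i]):
            total += (o - r) ** 2
            count += 1
    if count == 0:
        raise ValueError("at least one patch must be masked")
    return total / count


# Three flattened toy patches; patch 0 was visible, patches 1 and 2 were hidden.
original = [[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
reconstructed = [[9.0, 9.0], [0.5, 0.0], [1.0, 1.0]]

# The wild values for patch 0 are ignored because that patch was never hidden.
loss = masked_reconstruction_loss(original, reconstructed, masked_indices=[1, 2])
print(loss)
```

No label such as "cat" or "tree" appears anywhere: the original pixels themselves are the answer key.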

Similarly, when language models like BERT learn by masking words and predicting them, that's also a form of self-supervised learning. The "loss function," which is how the model measures its own mistakes during learning, uses information that's already present in the text itself. It doesn't need extra labels from people. This makes it possible for these models to learn from massive amounts of text found on the internet, building a rich understanding of language without direct human supervision for every single piece of data. It's like they're reading the entire internet and quizzing themselves on it.
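The self-quizzing step for text can be sketched in a few lines. This is a simplified illustration, not BERT's actual recipe, which has extra rules for how hidden words are replaced; the `[MASK]` placeholder, the 15 percent ratio, and the sample sentence are assumptions for the example.

```python
import random

MASK = "[MASK]"


def make_masked_example(tokens, mask_ratio, rng):
    """Hide a random subset of tokens; the hidden originals become the labels."""
    num_masked = max(1, int(len(tokens) * mask_ratio))
    positions = sorted(rng.sample(range(len(tokens)), num_masked))
    inputs = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]  # the answer comes from the text itself
        inputs[pos] = MASK
    return inputs, labels


rng = random.Random(0)  # fixed seed so the example is repeatable
tokens = "the cat sat on the mat near the door".split()
inputs, labels = make_masked_example(tokens, mask_ratio=0.15, rng=rng)
print(inputs)
print(labels)
```

The model would see `inputs` and be graded against `labels`, and nobody had to annotate anything by hand to produce either.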

The big benefit here, and why "mae akins roth" as a concept really highlights this, is the scalability. Because these systems can learn so much on their own, they can process and learn from truly enormous datasets. This leads to models that are much more capable and versatile, able to handle a wider range of tasks than if they had to rely solely on manually labeled data. It's a bit like giving them an endless supply of self-study materials.

"mae akins roth" - Looking at Model Performance

Finally, when we think about "mae akins roth," we also consider how we look at the overall performance of these complex models. It's not just about how they learn, but also how we can tell if they're actually getting better or if they're struggling. Sometimes, models can be a bit like a person who's great at one thing but not so good at another. We need ways to visualize and understand these strengths and weaknesses. This helps us decide if a model is ready for real-world tasks or if it needs more training.

One way to get a visual sense of how a model is doing is by looking at what we might call a "loss-size graph," often known as a learning curve. Imagine a chart where one axis shows how large the model's errors are (that's the "loss"), and the other shows how much training data it has seen. By plotting these two things together, we can get a good idea of whether the model is learning well with more data, or if it's hitting a wall. It's a simple picture that can tell us a lot about the model's behavior, which is pretty useful, isn't it?

"mae akins roth" - A Different View on Model Behavior

This kind of graph, which fits nicely into our discussion of "mae akins roth," helps us spot problems like "high variance" or "high bias." High variance means the model is too good at remembering the training data but struggles with new, unseen data, like a student who memorizes answers but doesn't truly understand the subject. High bias means the model is too simple and can't even learn the training data well, like a student who just can't grasp the basic concepts. Seeing these patterns on a graph helps us figure out what kind of adjustments the model might need.

So, for example, if the loss on the graph stays high for both the training data and new, unseen data even as the training data increases, it might suggest a high bias problem. This means the model just isn't able to learn the patterns in the data, no matter how much information it gets. On the other hand, if the loss for the training data goes down nicely, but the loss for new, unseen data stays high, that could point to a high variance issue. This means the model is learning the specifics of the training data too well, almost like memorizing, and it's not generalizing to new situations.
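Those two rules of thumb can be written down as a rough check. Everything numeric here is hypothetical: the loss values are invented, and the thresholds for "acceptable" loss and for a "large" gap are arbitrary choices you would tune for your own problem.

```python
def diagnose(train_loss, val_loss, acceptable_loss, max_gap):
    """Rough bias/variance label from final training and validation losses."""
    if train_loss > acceptable_loss:
        return "high bias"      # cannot even fit the training data
    if val_loss - train_loss > max_gap:
        return "high variance"  # fits the training data but fails on unseen data
    return "looks fine"


def diagnose_curve(curve, acceptable_loss=0.2, max_gap=0.1):
    """Judge a learning curve by its last point, the largest training size."""
    _, train_loss, val_loss = curve[-1]
    return diagnose(train_loss, val_loss, acceptable_loss, max_gap)


# Hypothetical curves: (training examples seen, training loss, loss on unseen data)
underfit_curve = [(100, 0.90, 0.95), (1000, 0.88, 0.90), (10000, 0.87, 0.88)]
overfit_curve = [(100, 0.10, 0.90), (1000, 0.08, 0.75), (10000, 0.07, 0.70)]

print(diagnose_curve(underfit_curve))
print(diagnose_curve(overfit_curve))
```

A real diagnosis would look at the whole shape of the curves rather than one point, but the last point is often enough to tell which of the two problems you are probably facing.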

Understanding these visual cues is a big part of making sure our digital helpers are truly effective. It helps us fine-tune them and make them more reliable for all sorts of tasks, from recognizing objects in photos to understanding complex written requests. The ideas wrapped up in "mae akins roth" truly help us get a better grasp of how these systems learn, how they make mistakes, and how we can guide them to perform better and better, which is very exciting, I think.

This exploration into "mae akins roth" has taken us through various ways models measure how well they predict things, like using Mean Absolute Error and its percentage version, MAPE. We also looked at how these systems learn from pictures by masking parts and guessing them, a process inspired by language models. We then moved on to how language models handle word positions, particularly with Rotary Position Embedding, which helps them understand long texts. Finally, we touched upon the idea of self-supervised learning, where models teach themselves from data, and how we visualize their performance to understand their strengths and weaknesses. It's all about making these clever digital tools work better for us.

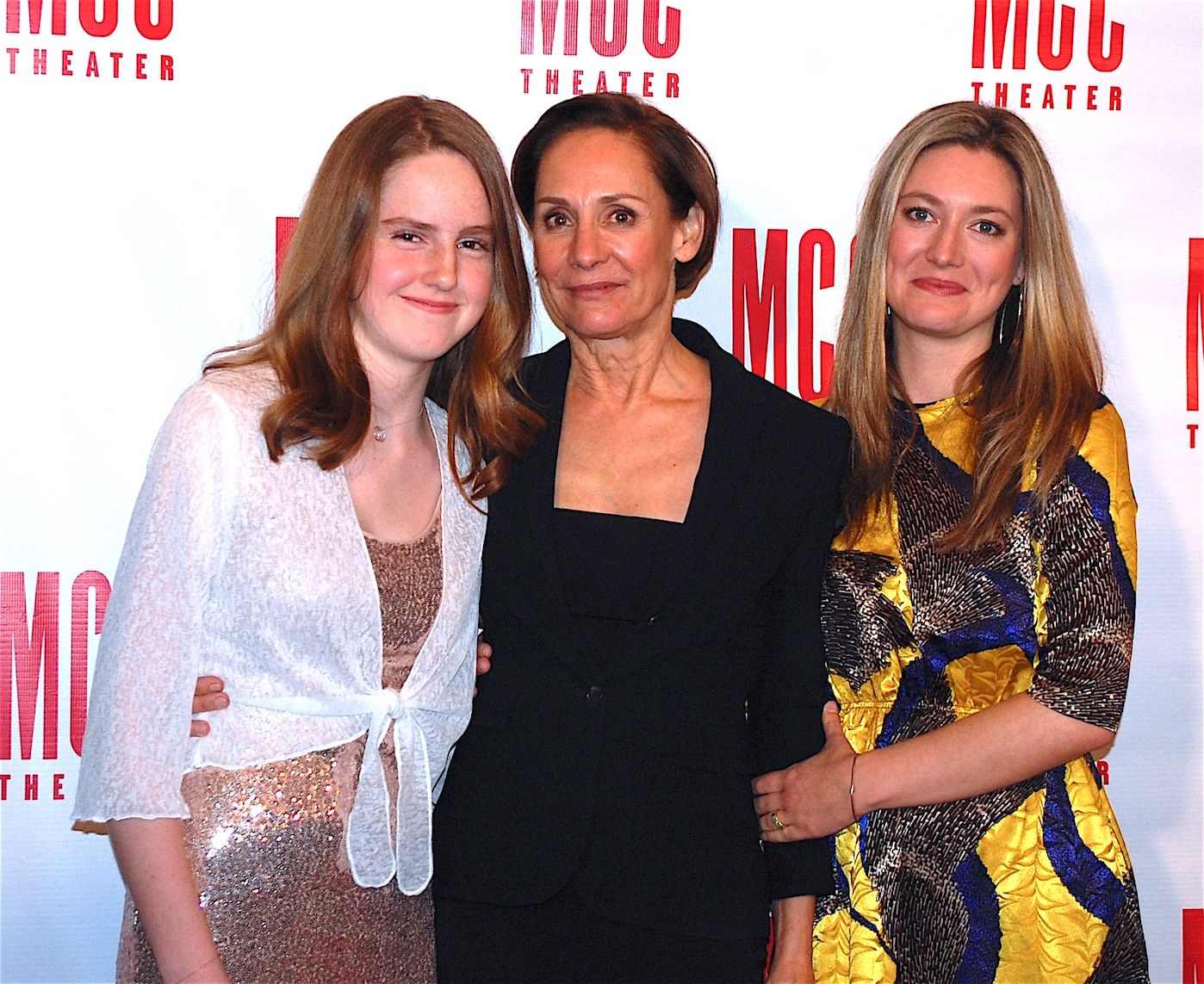

Mae Akins Roth

Mae Akins Roth Age, Biography, Height, Net Worth, Family & Facts

Mae Akins Roth Net Worth, Age, Height, Weight, Bio/Wiki 2024.