Understanding Mae Akins Roth - Insights From Data

Sometimes a name can offer a fresh way to look at ideas that usually seem technical. When we hear "Mae Akins Roth," it's almost like an invitation to consider how we measure and make sense of information. This isn't a life story in the usual sense. It's about the principles that help us understand how systems learn and how we track what's working and what's not. The way we decide whether something is accurate, or whether a computer program is doing its job well, really matters, and this name gives us a way to focus on that.

Think about it: in daily life we're always trying to get things right, to predict what might happen next, or to fix mistakes. Whether it's guessing the weather, planning a trip, or working through a complicated topic, getting closer to the truth is the goal. That much, surely, is something we can all agree on.

Our source material, while full of concepts that might sound like they belong in a textbook, gives us a peek into some clever methods for doing just that. It covers how we measure how far off a prediction is, and how a computer program can learn by filling back in pieces that were hidden from it. In a way, Mae Akins Roth could be seen as the spirit behind these efforts, helping us appreciate the thought that goes into making our digital world a little more predictable and a little less prone to big surprises.

Table of Contents

- Who is Mae Akins Roth - A Conceptual Look?

- What is Mae Akins Roth's Connection to Measuring Differences?

- How Does Mae Akins Roth Inspire Learning in Machines?

- The RoFormer and Mae Akins Roth - Long-Distance Connections?

- What Can Mae Akins Roth Teach Us About Unwanted Digital Footprints?

- Looking at Error - The Mae Akins Roth Way?

- Why Different Error Measures Matter for Mae Akins Roth's Insights.

- Mae Akins Roth's Legacy in Learning Systems.

Who is Mae Akins Roth - A Conceptual Look?

When we talk about "Mae Akins Roth" here, it's important to know that we're not introducing a person with a traditional biography. Our source material doesn't give any personal details or life story for anyone by this name. Instead, the name serves as a symbolic placeholder, a way to explore some important concepts that come up when we try to make sense of information and build smart systems. We're using the name to focus on the ideas rather than on a specific individual.

So, instead of a birthdate or a list of achievements, we'll treat "Mae Akins Roth" as a way to bring together different facets of accuracy, learning, and how we deal with things that don't go as planned. It's a bit of a creative spin, but it keeps things human-centered even when the underlying ideas are technical. We're essentially giving a friendly face to some practical ways of thinking about data.

What is Mae Akins Roth's Connection to Measuring Differences?

One of the first big ideas that "Mae Akins Roth" helps us think about is how we measure how "off" something is. Our text talks about MAE, which stands for Mean Absolute Error. Imagine you're trying to guess a number: you guess 10, but the real number was 8, so your error is 2. MAE takes all those errors, drops their sign (so guessing too high and guessing too low count the same), and averages them. It's a straightforward way to see how close your guesses are on average, in the same units as the thing you're predicting. Then there's MAPE, or Mean Absolute Percentage Error. Instead of averaging the raw errors, MAPE first divides each error by the true value and then averages those ratios as a percentage, which makes it useful for comparing errors across different scales. An error of 2 is huge when the true value is 8 (25%) but tiny when the true value is 1,000 (0.2%), and percentages make that difference visible.
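To make the two definitions concrete, here is a minimal Python sketch. The function names `mean_absolute_error` and `mean_absolute_percentage_error` are our own choices for illustration, not taken from any particular library, and the sketch simply refuses to compute MAPE when a true value is zero, since the percentage is undefined there. It compares the same miss of 2 against a small true value and a much larger one.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted|; over- and under-guesses count the same."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_percentage_error(actual, predicted):
    """Average of |actual - predicted| / |actual|, as a percentage.

    Each error is scaled by its own true value, so the measure is undefined
    when any true value is zero; this sketch raises an error in that case.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when a true value is 0")
    ratios = (abs(a - p) / abs(a) for a, p in zip(actual, predicted))
    return 100 * sum(ratios) / len(actual)


# The same miss of 2, first on a small true value, then on a large one.
print(mean_absolute_error([8], [10]))                             # 2.0
print(mean_absolute_error([1000], [1002]))                        # 2.0
print(round(mean_absolute_percentage_error([8], [10]), 1))        # 25.0
print(round(mean_absolute_percentage_error([1000], [1002]), 1))   # 0.2
```

MAE reports the same 2.0 both times, while MAPE shows that the miss matters far more relative to a true value of 8.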

These measurements give us a feel for how well a prediction or a model is doing. The text credits MAPE with not being thrown off by unusual values, and that holds in a limited sense: like MAE, MAPE counts each error in proportion to its size rather than squaring it. So if one guess is wildly wrong and all the others are close, that one mistake still pulls the average up, but not as dramatically as it would under a squared measure. MAPE has its own weak spot, though. Because it divides by the true value, it is undefined when a true value is zero and can balloon when true values are close to zero.

Another way to measure error is MSE, or Mean Squared Error. Instead of taking each error as it is, MSE squares it before averaging. That makes big errors count far more than small ones: an error of 2 contributes 4, while an error of 10 contributes 100. So a guess that's really far from the truth stands out much more than it would under MAE. It's like putting a spotlight on the really bad misses, so you can tell a small slip from a major blunder.
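A small sketch shows how squaring changes the picture. The helper names are hypothetical and the numbers are made up for illustration: two sets of predictions with exactly the same MAE, one spreading its error evenly and one concentrating it in a single blunder.

```python
def mean_absolute_error(actual, predicted):
    """Average size of the misses, ignoring their sign."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mean_squared_error(actual, predicted):
    """Average of the squared misses, so large misses dominate."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


actual = [10, 10, 10, 10]
small_misses = [12, 8, 12, 8]    # four misses of 2 each
one_blunder = [10, 10, 10, 18]   # three perfect guesses and one miss of 8

print(mean_absolute_error(actual, small_misses))  # 2.0
print(mean_absolute_error(actual, one_blunder))   # 2.0
print(mean_squared_error(actual, small_misses))   # 4.0
print(mean_squared_error(actual, one_blunder))    # 16.0
```

Both sets of guesses are off by 2 on average, but MSE is four times larger for the single blunder, which is the spotlight effect in action.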

How Does Mae Akins Roth Inspire Learning in Machines?

Beyond measuring errors, "Mae Akins Roth" also brings to mind how machines learn to understand the world, especially through Masked Autoencoders, which are also abbreviated MAE (not to be confused with Mean Absolute Error). The method is simple at heart. Imagine you have a picture cut into a grid of small squares, and you randomly cover up a large share of those squares, like putting sticky notes over them; the original paper hides 75% of them. The computer's job is to figure out what was under the sticky notes and reconstruct the missing pieces. It's like a puzzle where the computer has to fill in the blanks.
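The masking step itself is short. The sketch below assumes a 224×224 image cut into 16×16 patches (a common Vision Transformer setup) and the 75% mask ratio the paper uses by default; the function names are hypothetical. It only decides which patch indices stay visible and which are hidden. In the actual model, only the visible patches go through the encoder, and a lightweight decoder then predicts the hidden ones.

```python
import random


def patch_count(image_size, patch_size):
    """Number of non-overlapping square patches that tile a square image."""
    if image_size % patch_size:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size
    return per_side * per_side


def random_patch_mask(num_patches, mask_ratio, rng):
    """Shuffle the patch indices and hide the first `mask_ratio` share of them.

    Returns (visible, masked) as sorted lists of patch indices.
    """
    num_keep = int(num_patches * (1 - mask_ratio))
    order = list(range(num_patches))
    rng.shuffle(order)
    visible = sorted(order[:num_keep])
    masked = sorted(order[num_keep:])
    return visible, masked


n = patch_count(224, 16)  # 14 x 14 grid of patches
visible, masked = random_patch_mask(n, 0.75, random.Random(0))
print(n, len(visible), len(masked))  # 196 49 147
```

Because the mask is redrawn at random each time, the model cannot memorize which squares are hidden and has to learn what images generally look like.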

This idea comes from something similar in language models such as BERT, which learns by guessing missing words in a sentence. With images, the "words" are small blocks, or "patches," of the picture. By repeatedly guessing the hidden patches and checking its guesses against the originals (in the paper, by measuring the squared pixel error on the masked patches only), the model gradually learns what images look like and what goes where. It's a way for machines to teach themselves without someone labeling what everything is. This kind of self-supervised pretraining has become a widely used way to prepare vision models before they are fine-tuned for specific tasks.
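The "checking its guesses" step can be sketched as a loss function. The Masked Autoencoders paper scores reconstructions with mean squared error in pixel space, computed only on the masked patches, much as BERT scores only the masked words. This is a simplified, hypothetical version on tiny made-up patches (each a flat list of pixel values); the paper also describes a variant that normalizes each patch's pixels before comparing, which this sketch leaves out.

```python
def masked_reconstruction_loss(original, reconstructed, masked):
    """Mean squared error over the pixels of the masked patches only.

    `original` and `reconstructed` are lists of patches, each patch a flat
    list of pixel values; `masked` lists the indices of the hidden patches.
    """
    total, count = 0.0, 0
    for i in masked:
        for o, r in zip(original[i], reconstructed[i]):
            total += (o - r) ** 2
            count += 1
    if count == 0:
        raise ValueError("no masked pixels to score")
    return total / count


original = [[10, 20], [50, 50], [90, 80]]
reconstructed = [[0, 0], [40, 50], [90, 60]]  # patch 0 was visible to the model
print(masked_reconstruction_loss(original, reconstructed, masked=[1, 2]))  # 125.0
```

Patch 0 was visible, so its large mismatch is ignored; the loss only reflects how well the hidden patches were filled in.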

Our source text points out that this idea was the basis of an influential paper at CVPR 2022, a major computer vision conference: "Masked Autoencoders Are Scalable Vision Learners." It drew a lot of attention and has been widely cited. That shows learning by "masking" and "reconstructing" was a significant step forward in how computers learn to see. It's part of a bigger trend in self-supervised learning, where machines figure things out on their own instead of needing huge numbers of labeled examples. Pretty cool, isn't it?

The RoFormer and Mae Akins Roth - Long-Distance Connections?

Thinking about "Mae Akins Roth" also leads us to how machines handle long pieces of text, which can be surprisingly tricky. Our text mentions RoFormer, a language model that uses a special way of tracking where words are in a sentence, called RoPE (Rotary Position Embedding). Language models can struggle to relate words near the start of a long document to words near the end. RoPE encodes each word's position by rotating pairs of numbers in the query and key vectors that the attention mechanism compares, by angles that grow with the position. Because of how rotations combine, the attention score between two words ends up depending on how far apart they are, not on where they sit in absolute terms. The RoFormer paper lists flexibility with sequence length among the benefits of this design.
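Here is a minimal sketch of the rotation RoPE applies, following the formulation in the RoFormer paper: the vector is split into consecutive pairs, and pair `i` is rotated by `position * theta_i`, with `theta_i = 10000 ** (-2i / d)`. The query and key values are made up, and the function names are hypothetical. Some libraries pair dimensions differently (for example, the first half of the vector against the second half), which changes the bookkeeping but not the idea. The check at the end confirms the key property: two positions three apart give the same attention score whether they sit near the start or a thousand tokens in.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs (x0, x1), (x2, x3), ... of `vec`.

    Pair i is rotated by position * theta_i, where theta_i = base ** (-2i / d).
    """
    d = len(vec)
    if d % 2:
        raise ValueError("vector length must be even")
    out = []
    for i in range(d // 2):
        angle = position * base ** (-2 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        x, y = vec[2 * i], vec[2 * i + 1]
        out.extend([x * c - y * s, x * s + y * c])  # standard 2D rotation
    return out


def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]  # made-up query vector
k = [1.0, 0.4, -0.7, 0.2]  # made-up key vector

# Same gap of 3 positions, very different absolute positions.
near = dot(rope(q, 2), rope(k, 5))
far = dot(rope(q, 1000), rope(k, 1003))
print(math.isclose(near, far, rel_tol=1e-9, abs_tol=1e-9))  # True
```

Rotating both vectors leaves their lengths unchanged, so RoPE injects position information without distorting the content the vectors carry.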

This ability to handle long-text semantics is a big deal. Imagine summarizing a whole book, or understanding a legal document that runs for pages and pages. If the computer can't keep track of meaning from beginning to end, it will miss a lot. Position schemes like RoPE, used in RoFormer and in many later language models, are part of what lets computers read and reason over much larger chunks of text in one go.

What Can Mae Akins Roth Teach Us About Unwanted Digital Footprints?

Here's a slightly different angle that "Mae Akins Roth" can help us consider, one that may feel more familiar. Our source material brings up a common annoyance: "I uninstalled 1 App, but the login item's allow in background still exists! How to completely delete it." This isn't about error metrics or machine learning, but it touches on something just as important: persistence. Even when we think we've removed something, bits of it can stick around, causing unexpected behavior or just clutter. It's a very common problem.

In a way, this is like an error that persists even after we've tried to correct it. Just as a model can carry a small lingering error that affects its overall performance, our digital lives can hold leftover pieces we wish would disappear. Getting rid of something completely, whether it's an app's background process or a small inaccuracy in a data model, can be trickier than it seems. It makes you think about how thorough we need to be.

This little anecdote underscores that truly cleaning things up, whether data, software, or our understanding of a concept, often takes more than a look at the surface. It's about making sure every piece that might cause problems or unwanted behavior is actually gone. Developers and data scientists deal with this constantly as they try to keep their systems clean and efficient.

Looking at Error - The Mae Akins Roth Way?

The concepts we've explored through "Mae Akins Roth" highlight how important it is to look at errors from different angles. MAE gives a straightforward average of how far off our predictions are, with every unit of error counting the same. MSE makes bigger mistakes stand out because it squares the differences. MAPE expresses each error as a percentage of the true value, which helps us compare fairly across different scales. Each method offers its own lens on the same question: how accurate are we? That's why it's important to pick the right tool for the job.

No error measure is inherently "better" than another; the point is to choose the one that fits what you're trying to achieve or understand. If you can't tolerate large errors, MSE might be your go-to, because it punishes big misses much more severely. If you want an intuitive average sense of error that is less swayed by outliers, MAE (or MAPE, when the true values stay well away from zero) might suit you better. It's about what story the error tells you.

Why Different Error Measures Matter for Mae Akins Roth's Insights.

Our source text even has a note about how people sometimes confuse RMSE with MSE, or MSE with MAE, which shows how easy these are to mix up. RMSE, the Root Mean Squared Error, is simply the square root of MSE, which brings the error back into the same units as the original data. The fact that there are different ways to calculate these errors really matters, because different situations call for different kinds of precision or different ways of highlighting problems. For example, if you

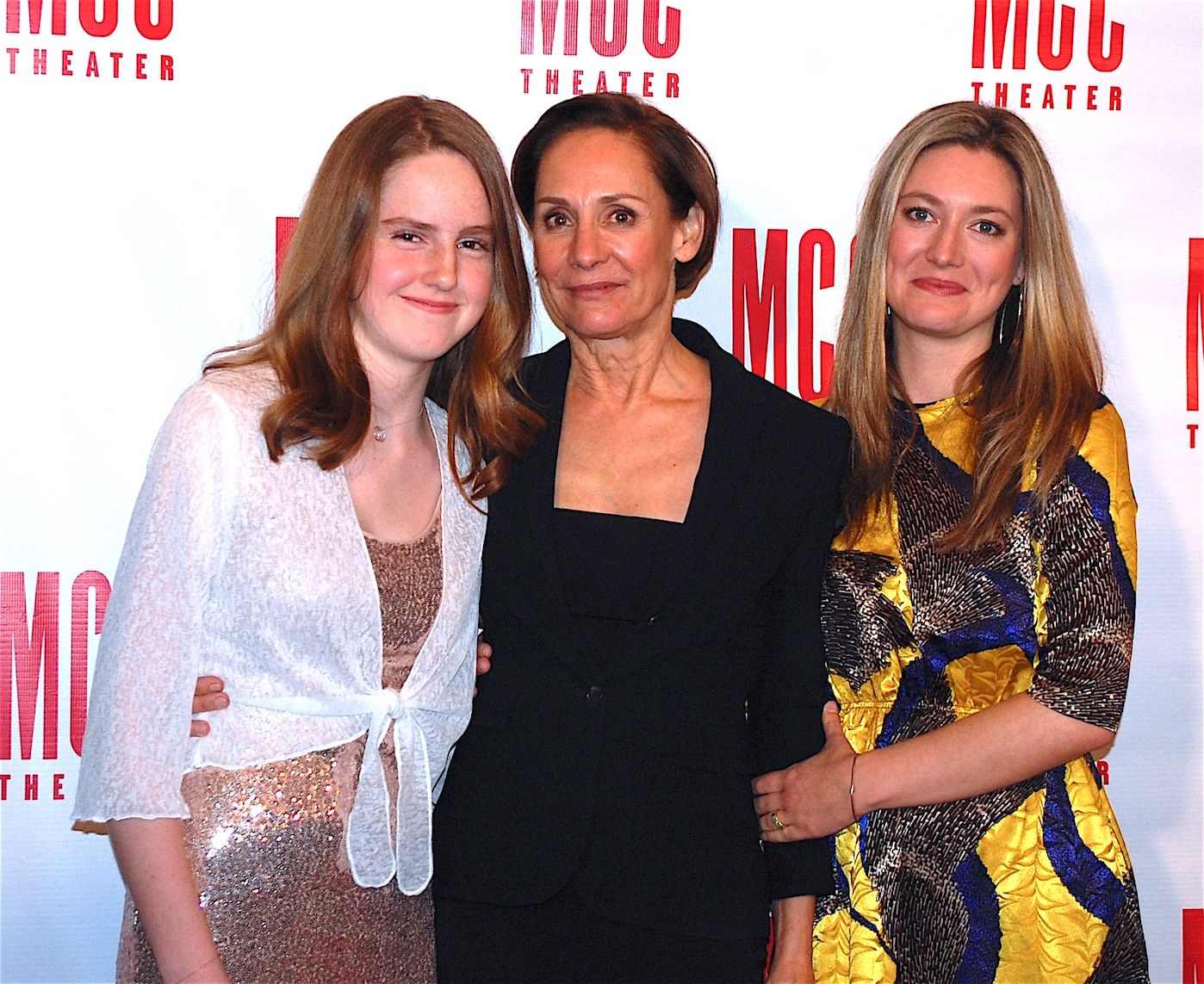

Mae Akins Roth

Mae Akins Roth Age, Biography, Height, Net Worth, Family & Facts

Mae Akins Roth Net Worth, Age, Height, Weight, Bio/Wiki 2024.