Bert Kreischer Family - Exploring Language Models

Sometimes, a name can bring to mind many different thoughts, can't it? When you hear "Bert," you might think of a friendly face, a character from a show, or perhaps even a person you know. It's really quite interesting how a single name can have so many different connections in our minds. But what if we told you there's another "Bert" out there, one that's a bit more digital, yet just as impactful in its own way? This "Bert" isn't a person at all, but rather a very clever computer program that helps us make sense of words.

You see, in the world of computers and language, there's a big need for programs that can truly grasp what we mean when we write or speak. It's one thing for a computer to just read words, but it's quite another for it to actually get the deeper meaning, the feelings, and how sentences connect together. This is where our digital "Bert" comes into the picture, helping machines understand human language in a much more natural way. It's a pretty big deal, actually, for how we interact with technology every day.

Table of Contents

- What is this BERT, and How Did It Come to Be?

- How Does BERT Work Its Magic on Text?

- What Makes BERT So Unique in the Digital Language Family?

- Why is BERT Such a Big Deal for Understanding Language?

- The Digital Family of Language Understanding

- BERT's Secret Sauce - Learning from Lots of Words

- The Building Blocks of BERT's Cleverness

- How BERT Helps the Digital "Family" of Language Tasks

What is this BERT, and How Did It Come to Be?

So, what exactly is this "Bert" we are talking about? Well, it stands for something a bit long: Bidirectional Encoder Representations from Transformers. That's a mouthful, I know! But the main idea is that it's a language model that helps computers really understand human language. It came onto the scene in October 2018, introduced by a team of researchers at Google, and it was a pretty big moment for how computers deal with words. You see, before BERT, computers often struggled to grasp the full meaning of a sentence, especially when a word could mean different things depending on how it was used. This new model changed that quite a bit. It's like giving a computer a much better pair of reading glasses, allowing it to see the nuances that were once blurry. This kind of progress is really important for making our digital tools more helpful.

The goal with BERT was to create a language model that could learn to represent text in a really deep way. Imagine if a computer could not just read words, but also understand how those words relate to each other, even if they are far apart in a sentence. That's what BERT helps with. It learns to see text not just as a string of words, but as a sequence where each word has a connection to the others around it. This is a very different way of looking at language for a machine. It's almost like it's building a mental map of how words fit together, which is pretty neat. This ability to form rich representations of language is what sets it apart, and it has had a ripple effect across many digital areas.

When the researchers introduced BERT, they were aiming for a new way to help computers learn about language without needing a lot of human help. Most older methods needed people to label lots of data, which took a lot of time and effort. BERT, on the other hand, was designed to learn from a huge amount of plain, unlabeled text. This means it could just read enormous amounts of written material, figuring things out on its own. It's a bit like a child learning to speak by just listening to conversations all day long, rather than being explicitly taught every single word and rule. This method of learning has made it very versatile and powerful, enabling it to adapt to many different language tasks with only a relatively small amount of additional, task-specific training, often called fine-tuning.

How Does BERT Work Its Magic on Text?

So, how does this digital "Bert" actually go about doing its work? The core idea behind it is pretty clever. BERT is what we call a "bidirectional transformer." Now, "transformer" here refers to a specific kind of neural network, a design that weighs how much each word in a sentence matters to every other word. The "bidirectional" part is really important. It means that when BERT looks at a word in a sentence, it doesn't just look at the words that came before it, but also the words that come after it. This is a big deal because the meaning of a word often depends on what comes both before and after it. Think about the word "bank." It could mean the side of a river or a place where you keep money. To know which one, you need to read the whole sentence. BERT does just that, looking both ways to get the full picture.
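To make the "looking both ways" idea concrete, here is a small toy sketch in Python. It is not how BERT is implemented; it only compares which words are visible when reading left to right versus in both directions. The function names `left_context` and `bidirectional_context` and the example sentence are made up for this illustration.

```python
def left_context(tokens, index):
    """Words a strictly left-to-right reader has seen before position `index`."""
    return tokens[:index]


def bidirectional_context(tokens, index):
    """Words on both sides of position `index` (everything except the word itself)."""
    return tokens[:index] + tokens[index + 1:]


# Hypothetical example sentence, chosen so the clue comes after the ambiguous word.
sentence = "she sat on the bank of the river".split()
position = sentence.index("bank")

# The left-only view never sees "river", so "bank" stays ambiguous.
print(left_context(sentence, position))

# The two-sided view includes "river", which settles the meaning.
print(bidirectional_context(sentence, position))
```

The point of the comparison is simply that the deciding word, "river," only shows up in the two-sided view.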

BERT was "pretrained" on a massive amount of unlabeled text. This pretraining involved two rather clever tasks. One task was called "masked language modeling." Imagine taking a sentence and hiding a few words, like putting a sticky note over them. BERT's job was to guess what those hidden words were, based on all the other words in the sentence, both before and after the gap. This forces it to really understand the context. For instance, if the sentence was "The cat sat on the ___," and the word "mat" was hidden, BERT would try to figure out "mat" by looking at the words around the gap, here "The cat sat on the." This helps it learn how words fit together naturally. It's almost like a very advanced fill-in-the-blank game, but with millions of sentences.
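The fill-in-the-blank setup can be sketched in a few lines of Python. This is a simplified illustration of how masked training examples might be prepared, not BERT's actual code. The names `mask_tokens`, `mask_rate`, and `seed` are hypothetical. The default rate of 15% matches the figure reported in the original BERT paper, but this sketch always swaps in a `[MASK]` placeholder, whereas the real procedure sometimes substitutes a random word or leaves the word unchanged.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Hide a random subset of tokens and remember the originals as targets.

    Returns (masked_tokens, targets), where targets maps each hidden
    position to the word the model would have to guess.
    """
    rng = random.Random(seed)
    # Always hide at least one token so short sentences still yield a target.
    n_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))

    masked = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]  # the answer the model is trained to recover
        masked[pos] = MASK          # the "sticky note" covering the word
    return masked, targets


tokens = "the cat sat on the mat".split()
masked, targets = mask_tokens(tokens)
print(masked)   # the sentence with one word replaced by [MASK]
print(targets)  # which position was hidden, and what the word was
```

During pretraining, the model would see only the masked sentence and be scored on how well it recovers the words stored in `targets`.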

The second pretraining task was about understanding how sentences relate to each other. BERT was given two

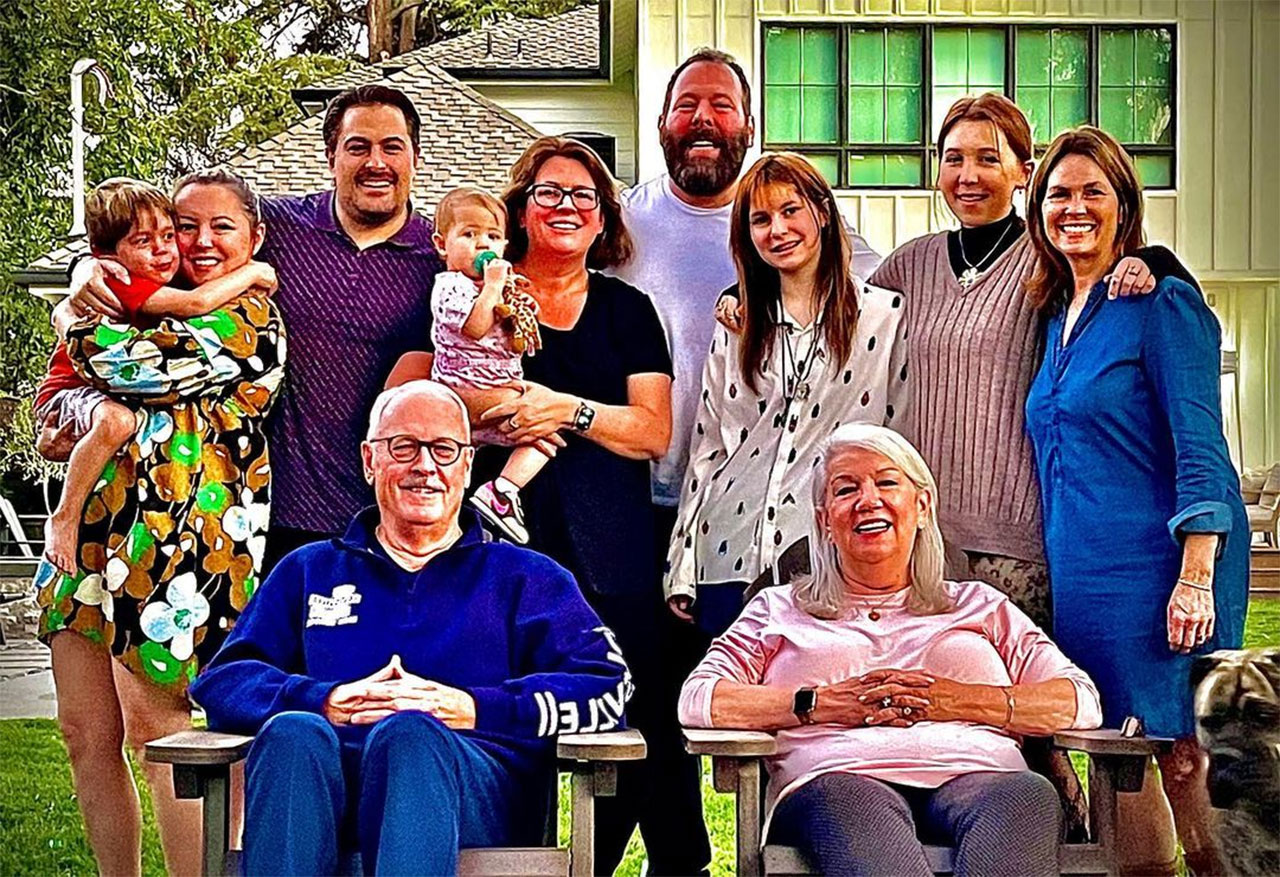

Bert Kreischer family: wife, kids, parents, siblings - Familytron

Bert Kreischer Net Worth | Wife - Famous People Today

Meet Bert Kreischer's Family: Wife and Daughters